The rapid evolution of artificial intelligence (AI) has placed unprecedented demands on data center infrastructure, particularly on cabling systems. Modern AI data centers must balance ultra-high bandwidth, sub-microsecond latency, and energy efficiency to support the massive computational workloads of AI models. Achieving this balance means overcoming three cabling challenges: meeting fast-rising speed and density requirements, maintaining signal integrity at scale, and staying flexible enough for rapid expansion.

The Three Core Challenges of AI Data Center Cabling

1. Ultra-High Speed and Density Demands

AI data centers pack dense clusters of GPUs and TPUs into racks to handle training and real-time inference workloads. Links typically operate at 100G to 400G, with some configurations reaching 800G and beyond. For instance, NVIDIA’s DGX H100 servers feature eight 400G storage ports and four 800G switch ports, while a single DGX SuperPOD can aggregate 384 400G fiber links across 18 switches.
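To make the scale concrete, the figures quoted above can be turned into aggregate bandwidth with a few lines of arithmetic. This is a minimal sketch that takes the port and link counts from the paragraph at face value; the helper name `aggregate_gbps` is hypothetical and only illustrative.

```python
# Port and link counts as quoted in the paragraph above
# (taken at face value, not independently verified here).
STORAGE_PORTS, STORAGE_PORT_GBPS = 8, 400
SWITCH_PORTS, SWITCH_PORT_GBPS = 4, 800
POD_LINKS, POD_LINK_GBPS, POD_SWITCHES = 384, 400, 18


def aggregate_gbps(port_groups):
    """Sum bandwidth over (port_count, gbps_per_port) pairs."""
    return sum(count * gbps for count, gbps in port_groups)


# Optical bandwidth leaving one server, in Gb/s.
server_gbps = aggregate_gbps([
    (STORAGE_PORTS, STORAGE_PORT_GBPS),
    (SWITCH_PORTS, SWITCH_PORT_GBPS),
])

# Total bandwidth carried by the pod's fiber links, in Tb/s.
pod_tbps = POD_LINKS * POD_LINK_GBPS / 1000

# Average number of fiber links landing on each switch.
links_per_switch = POD_LINKS / POD_SWITCHES

print(f"Per-server optical bandwidth: {server_gbps} Gb/s")
print(f"Pod fiber-link bandwidth: {pod_tbps} Tb/s")
print(f"Average links per switch: {links_per_switch:.1f}")
```

On these inputs a single server terminates 6.4 Tb/s of optics, and each switch handles roughly 21 fiber links on average, which is why port density becomes a planning constraint.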

2. Signal Integrity at Scale

At 400G/800G speeds, even minor interference can cause signal degradation, added latency, or data loss. For example, Meta’s LLM training clusters demand microsecond-level synchronization; because the GPUs work in lockstep, a single degraded link can stall the whole training job. Traditional cabling struggles to limit attenuation at these speeds, which calls for ultra-low-loss (ULL) components that preserve signal fidelity.

3. Scalability and Flexibility for Rapid Expansion

AI clusters evolve dynamically: Meta’s Llama 3 training, for example, involved over 10,000 GPUs. Legacy point-to-point cabling lacks adaptability and requires costly rewiring during expansions. This rigidity increases operational costs and complicates management, highlighting the need for modular, future-proof architectures.

Strategic Fiber Cabling Optimizations for AI Workloads

1. Prioritize Cost-Effective, High-Density Fiber

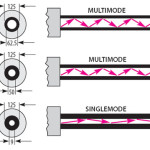

Most GPU-to-GPU connections span under 100 meters, making parallel fiber optics (e.g., MPO cables) more economical than legacy duplex solutions. For example, 400G-DR4 transceivers with eight-fiber MPO cables cost less than 400G-FR4 alternatives. High-density MPO-12/24 connectors also save space, offering 4x to 8x higher fiber density per rack unit (RU) than traditional duplex cabling.
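Parallel optics use more fiber strands per link than duplex optics, which is part of why high-density connectors matter. The sketch below counts strands for both options. It assumes eight fibers per 400G-DR4 link (as stated above) and one fiber pair per duplex link; the 144-fiber trunk size and the function names are hypothetical planning inputs, not recommendations.

```python
import math

FIBERS_PER_DR4_LINK = 8      # from the text: eight-fiber MPO per 400G-DR4 link
FIBERS_PER_DUPLEX_LINK = 2   # duplex optics such as 400G-FR4 use one fiber pair
FIBERS_PER_TRUNK = 144       # hypothetical trunk cable size, for illustration only


def fibers_needed(links: int, fibers_per_link: int) -> int:
    """Total fiber strands required for a number of identical links."""
    return links * fibers_per_link


def trunks_needed(total_fibers: int, fibers_per_trunk: int) -> int:
    """Trunk cables required, rounding up to whole cables."""
    return math.ceil(total_fibers / fibers_per_trunk)


links = 384  # link count quoted earlier in this article; any value works

dr4_fibers = fibers_needed(links, FIBERS_PER_DR4_LINK)
duplex_fibers = fibers_needed(links, FIBERS_PER_DUPLEX_LINK)
dr4_trunks = trunks_needed(dr4_fibers, FIBERS_PER_TRUNK)

print(f"DR4 (parallel): {dr4_fibers} fibers in {dr4_trunks} trunks")
print(f"Duplex:         {duplex_fibers} fibers")
```

The parallel design needs four times as many strands for the same link count, so the saving has to come from the transceivers and from packing those strands into multi-fiber connectors.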

2. Adopt Structured, Modular Cabling

Structured cabling systems integrate transceivers, fiber cables, and management tools to enable incremental growth without infrastructure overhauls. Unlike active optical cables (AOCs), whose transceivers are permanently attached so the whole assembly must be replaced during upgrades, modular designs let operators add or swap hardware independently of the installed fiber, reducing downtime and complexity.

3. Deploy Ultra-Low-Loss Components for Critical Links

Not all fiber is equal for AI workloads. ULL fiber cables and connectors minimize attenuation, helping each channel stay within the insertion-loss budget that 400G/800G standards allow. Paired with advanced connectors (e.g., angled physical contact (APC) end faces that reduce back reflection), these components support reliable high-speed transmission.
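The effect of low-loss components is easiest to see in a link loss budget: fiber attenuation plus the loss of every mated connector pair must stay under the limit the transceiver allows. The sketch below is illustrative only. Every numeric value (the 3.0 dB budget, the per-kilometer attenuation, the per-connector losses) is an assumption chosen for the example; real figures should come from the applicable standard and the component data sheets. The `Channel` class and `margin_db` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Channel:
    length_m: float               # end-to-end fiber length in meters
    fiber_db_per_km: float        # fiber attenuation
    connector_pairs: int          # number of mated connector pairs in the path
    db_per_connector_pair: float  # insertion loss per mated pair

    def insertion_loss_db(self) -> float:
        """Total channel loss: fiber attenuation plus connector losses."""
        fiber_loss = self.length_m / 1000 * self.fiber_db_per_km
        connector_loss = self.connector_pairs * self.db_per_connector_pair
        return fiber_loss + connector_loss


def margin_db(channel: Channel, budget_db: float) -> float:
    """Remaining headroom; a negative value means the budget is exceeded."""
    return budget_db - channel.insertion_loss_db()


# ASSUMED values for illustration only; check the standard and data sheets.
BUDGET_DB = 3.0
standard = Channel(length_m=100, fiber_db_per_km=0.4,
                   connector_pairs=4, db_per_connector_pair=0.75)
low_loss = Channel(length_m=100, fiber_db_per_km=0.4,
                   connector_pairs=4, db_per_connector_pair=0.35)

for name, channel in [("standard", standard), ("low-loss", low_loss)]:
    print(f"{name}: loss {channel.insertion_loss_db():.2f} dB, "
          f"margin {margin_db(channel, BUDGET_DB):+.2f} dB")
```

With these illustrative inputs, connectors dominate the loss over a 100-meter run: the standard channel lands slightly over budget, while the low-loss channel keeps about 1.5 dB of headroom.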

Conclusion: Building AI-Ready Infrastructure

As AI workloads grow, data centers must adopt cabling strategies that prioritize speed, density, and adaptability. By leveraging high-density fiber, modular architectures, and ULL components, operators can future-proof their infrastructure while minimizing costs and complexity. The right cabling choices are no longer optional; they are foundational to unlocking AI’s full potential.